Advanced tracking of agent and grader performance

Advanced tracking of agent performance lets you pinpoint those agents whose behavior can and should be improved. In order to do this, a score is created out of multiple items related to:

- the way each call is handled (e.g. call duration)
- their call performance (e.g. number of sales)
- the QA scores that were given to their calls

As the scoring is in itself quite complex and made up of multiple factors, it is based on a rule set that represents a business-specific set of targets that should be met. For each rule, there are two possible levels of non-compliance: a yellow and a red threshold. Each threshold can, in turn, have its own score associated with it.

For example, you could say that the expected call duration is 100 seconds; calls that are between 100 and 150 seconds are "yellow" and worth 1 review point, calls that are over 150 seconds are "red" and worth 4 review points. The higher an agent's review score, the more prominently the agent will be displayed.

When a rule set is applied to a set of calls, each selected agent gets a score expressed in review points, which is the sum of all anomalies detected by the chosen rule set.
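As a rough illustration of how review points might add up, the sketch below applies the call-duration example above to three calls of one agent. The `Rule` class, its field names, the per-call summing and the treatment of values that fall exactly on a threshold are assumptions made for this example; they do not describe how QueueMetrics stores or evaluates rule sets.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    """One rule with a yellow and a red threshold (hypothetical structure)."""
    name: str
    yellow_above: float  # values above this are at least "yellow"
    red_above: float     # values above this are "red"
    yellow_points: int
    red_points: int

    def points(self, value: float) -> int:
        """Return the review points this rule assigns to one value."""
        if value > self.red_above:
            return self.red_points
        if value > self.yellow_above:
            return self.yellow_points
        return 0


def review_score(calls, rules):
    """Sum the review points of every rule over every call of one agent."""
    return sum(rule.points(call[rule.name]) for call in calls for rule in rules)


# The example from the text: expected 100 s, yellow up to 150 s, red beyond.
duration_rule = Rule("duration", yellow_above=100, red_above=150,
                     yellow_points=1, red_points=4)
calls = [{"duration": 90}, {"duration": 120}, {"duration": 200}]
print(review_score(calls, [duration_rule]))  # 0 + 1 + 4 = 5
```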

The system then displays the agents involved in descending score order, prompting the grader to investigate further by accessing the set of calls, the set of QA records and the relevant audio recordings.

The result of this activity is:

- In-depth knowledge of agent performance
- Agent life cycle management: the grader can move agents between agent groups, so that you can manage a process where an agent belongs to multiple skill groups during their lifetime
- Continuous improvement of agent performance through agent tasks, e.g. coaching sessions, or completing Computer Based Training to improve the agent’s skills.

For example, an agent could start her life as member of the group 'New Hires'. When reviewed after a while, she could be moved to 'New Hires Probation' when she is found lacking in some subject. After a while she could be checked again and moved back to 'New Hires'.

As collateral features, the system also offers facilities to:

- Create rule sets based on the average properties of a set of calls. This makes it easy to have reference points that can then be manually edited.
- Track the life cycle of agents. This is done by tracking the different agent groups each agent has been a member of and the time period they spent in each.

Just like for agents, there is also the problem of comparing graders to each other, in order to have a "fair" view of what is going on and to make sure that grading happens under the company’s guidelines and not each grader’s own preferences. Grader calibration reports fulfill this purpose by comparing graders to each other.

Tracking agent performance

For users holding the key "QA_PERF_TRACK", a new link appears on the QueueMetrics home page, as shown below:

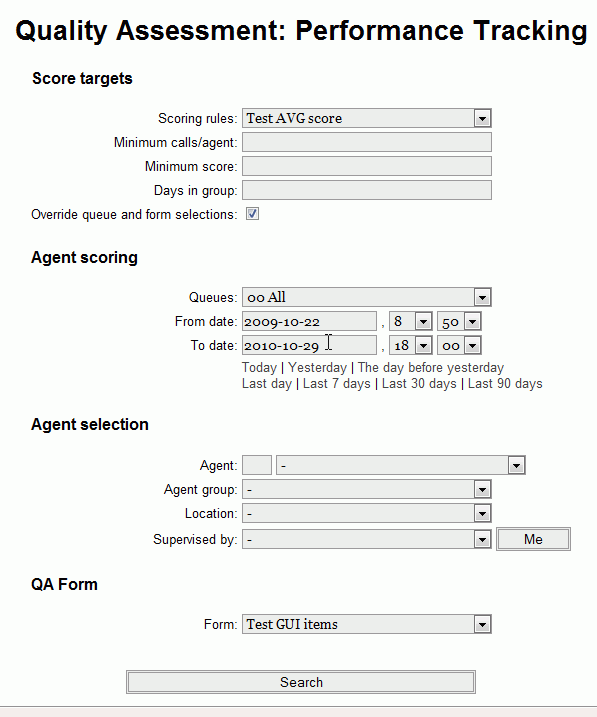

When clicking on it, you are led to the main search page:

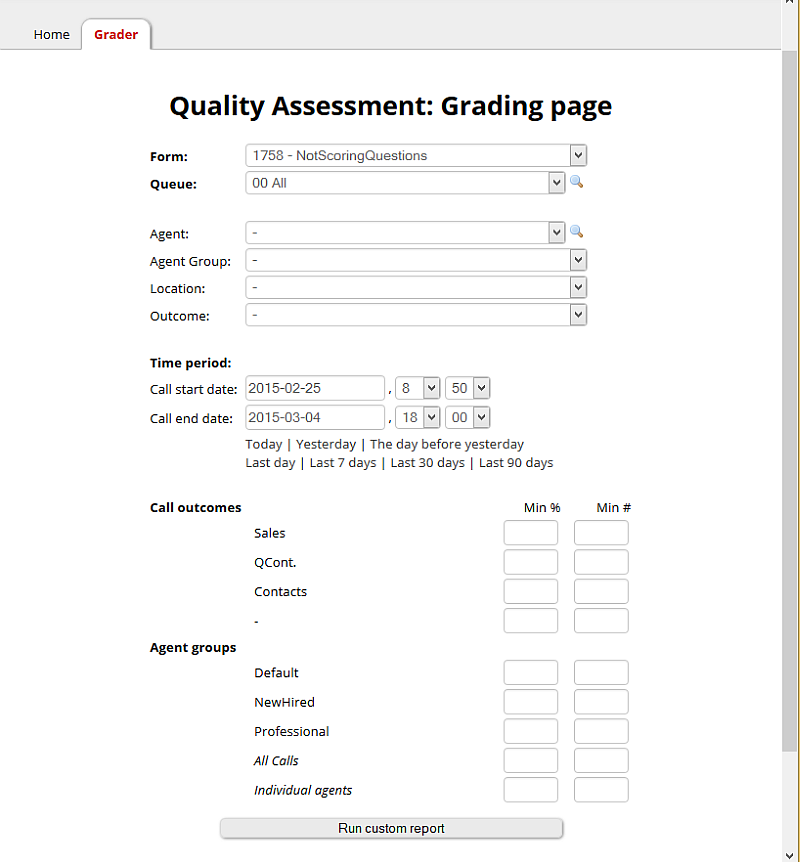

This page lets the grader search for a set of agents to be reviewed. This requires setting four search dimensions:

- A queue (or set of queues) and a time period
- A way to search for a set of agents (a specific one, or a group, or a location, or all agents that have the same supervisor)
- A QA form to be graded
- A rule set that applies to the above search and defines scoring. You should define your own before you start this activity (see Defining agent performance rules).

The scoring rule is usually associated with a particular queue and form, but the user can override this selection by checking the option "Override queue and form selections" and by specifying other parameters that affect the calculation, such as:

- the minimum expected score,
- the minimum number of calls that should be analyzed,
- the minimum number of days the agent must have been in the group during the period specified for the analysis.

These minimums prevent undersampled agents from being considered (e.g. if an agent has been scored only once, we can expect this score to be less meaningful than that of an agent whose score is based on 10 elements).
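The effect of these minimums can be pictured as a simple filter. The following is a minimal sketch, assuming hypothetical agent records with `calls` and `days_in_group` fields; it covers only the call and day minimums and is not QueueMetrics code.

```python
def split_by_minimums(agents, min_calls, min_days_in_group):
    """Separate agents with enough data from undersampled ones.

    Returns the names of included agents and, for each excluded agent,
    the name together with the first minimum that was not met.
    """
    included, excluded = [], []
    for agent in agents:
        if agent["calls"] < min_calls:
            excluded.append((agent["name"], "minimum number of calls"))
        elif agent["days_in_group"] < min_days_in_group:
            excluded.append((agent["name"], "minimum days in group"))
        else:
            included.append(agent["name"])
    return included, excluded


# Hypothetical sample data.
agents = [
    {"name": "Alice", "calls": 25, "days_in_group": 90},
    {"name": "Bob", "calls": 1, "days_in_group": 90},
    {"name": "Carol", "calls": 40, "days_in_group": 5},
]
included, excluded = split_by_minimums(agents, min_calls=10, min_days_in_group=30)
print(included)  # ['Alice']
print(excluded)  # [('Bob', 'minimum number of calls'), ('Carol', 'minimum days in group')]
```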

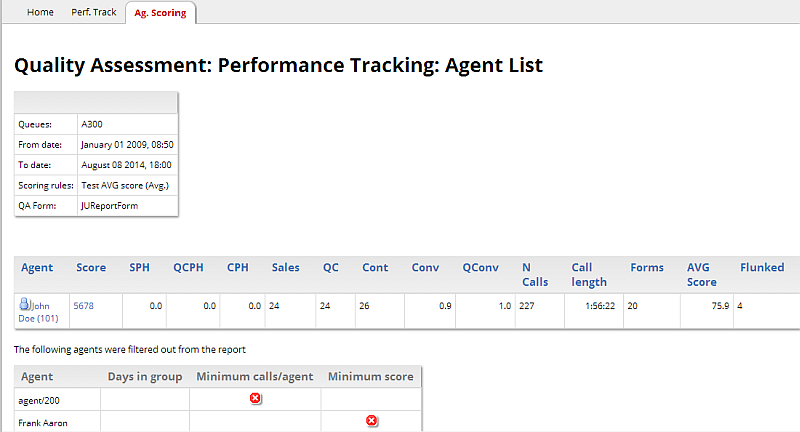

The button "Search" starts the calculation process and a new page will be displayed:

The items shown here are averages over all the calls that were found in the current set. The selected score rule is used to compute the overall Score value, and agents are shown sorted by their score in descending order.

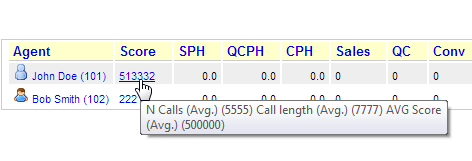

Hovering the mouse over the score value shows the details of the rules contributing to the overall score, as shown in the picture below:

Below the main result table, a second table shows the agents (if any) that were not included in the report and the threshold that was not met.

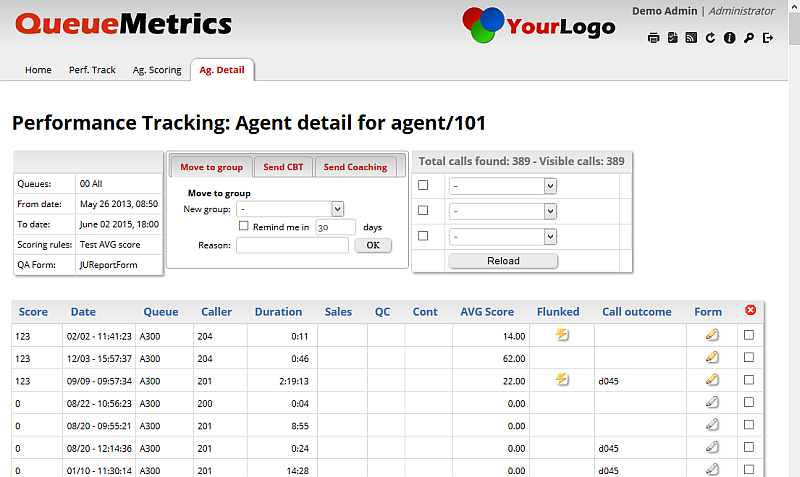

Clicking on the agent name or the associated score value gives the user access to the details. They are reported on a different page, like the one shown below.

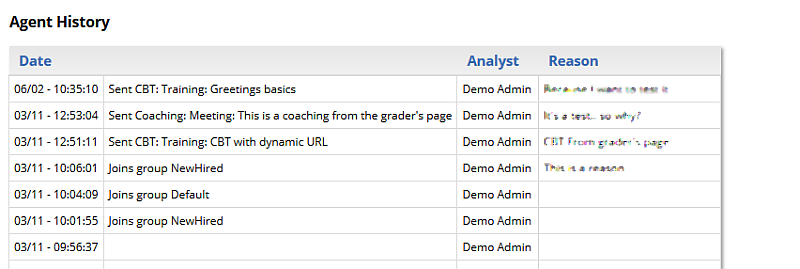

The details page is split into two parts. The top part reports the score details for each call the agent answered. The bottom one shows the detailed history associated with that particular agent.

Each line in the top table reports the score calculated by the rule selected in the search page without being averaged, and other relevant information for each call.

A pencil icon is shown if the call has a QA form associated with it; clicking it shows the associated QA form in a separate pop-up dialog. Users allowed to grade calls may also find a set of grayed-out pencil icons that can be used to score new calls from this page. After scoring each call they should press "Refresh" to have the page updated.

A special icon is assigned to Flunked calls. A call is defined as Flunked if the related QA Form has been graded but reached an average value below the Issue level.

Dynamic drill down

To make the grader’s life easier in case the set of calls to be analyzed is large, it is possible to use a set of dynamic criteria to reduce the data that is currently displayed.

The table on the top-right lets you add up to three drill-down rules that are active at the same time. Rules can be quickly disabled by clicking on the "Turn off" checkbox on the left of each rule.

The title of the section shows the total number of calls found for the agent and the number that is actually displayed. Calls that are not displayed have been filtered out. In order to see them all again, just clear or turn off all the filters.

The following rules are defined:

- Call length
- A form’s average score
- The performance tracker score
- The call outcome
- Whether the call is a Flunked one, or a Sale, or a Qualified Contact, or a Contact

Special notes about rules:

- If you enter multiple rules at once, they are all active
- Rules expecting a time duration (e.g. call length) will accept input as H:MM:SS (e.g. 0:23) or an integer number of seconds.
- Rules working on a float value have the float value converted to the nearest integer and then the rule is applied.
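To make the accepted duration formats concrete, here is a small sketch of how such input could be turned into seconds. The function name is hypothetical, and reading shorter forms such as `0:23` as minutes and seconds (that is, aligning the fields from the right) is an assumption, not documented QueueMetrics behavior.

```python
def parse_duration(text: str) -> int:
    """Return a duration in seconds from 'H:MM:SS'-style text or plain seconds.

    Fields are read from the right, so '0:23' is taken as 0 minutes and
    23 seconds (an assumption made for this sketch).
    """
    parts = text.strip().split(":")
    if not 1 <= len(parts) <= 3:
        raise ValueError(f"Unrecognized duration: {text!r}")
    seconds = 0
    for part in parts:
        # Each further field shifts the running total by a factor of 60.
        seconds = seconds * 60 + int(part)
    return seconds


print(parse_duration("0:23"))     # 23
print(parse_duration("1:02:03"))  # 3723
print(parse_duration("90"))       # 90
```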

It is always possible to sort data in the call list table by clicking on the title.

Taking remedial actions

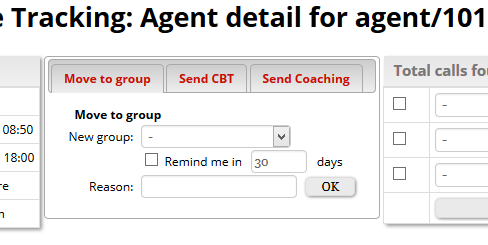

At the top of the page, the grader can take remedial actions using the form shown in the picture.

Move the agent to a different group

In order to move the agent to a different group, the grader selects the new group from the dropdown, optionally specifies a reason in the lower text box, and then presses the OK button to the right of the group dropdown.

If the user checks the "Remind me" checkbox before pressing the OK button, QueueMetrics will send a reminder task to the grader himself that will be displayed after a specified number of days. (This can be used as a reminder and is optional).

A new row with the operation details will be inserted in the agent’s history table after completion.

Send a CBT to the selected agent

In order to send a CBT (Computer Based Training) to the agent the report refers to, the grader should select an already known CBT from the dropdown list or manually specify a URL. An optional note (visible to the recipient agent) and an optional reason (hidden from the agent) can be added. The grader can optionally link a specific call to the CBT, so that the agent is able to refer to that particular call in the task details. To link a specific call to the CBT, the grader should click on the checkbox found on each row of the call list. Unlinking the call is done by clicking on the red icon in the list header.

A new 'Teaching' task will be sent to the agent with the title and the inserted URL.

A new row with the operation details will be placed in the history table after completion.

Send a Coaching (Meeting) task to the selected agent

A grader may want to send a Coaching/Meeting task to the specific agent the report is referring to. This can be done through the Send Coaching tab. A Coaching task is defined by a Title, a Message and a task date. The grader can optionally specify a coaching duration (in seconds), a note (visible to the agent) and a reason text (hidden to the agent). Pressing OK generates a new coaching task with the information provided. The task will be placed in the history table after completion.

Finding calls to be graded

This is a separate page accessed by users holding the QA_GRADER security key.

The QA graders page provides graders with a tool that helps them find which calls are to be graded. Calls can be searched by specifying several targets: per client, per queue, per agent and per agent group. Each target can be expressed as an absolute minimum, a percentage or both.

Default targets are expressed:

- Per queue or set of queues
- Per location
- Per individual agents (that is, the minimum required for each agent)
- Per call outcome (see Configuring call outcomes)
- Per agent or agent groups (see Configuring agent groups)

Targets can be disabled as well, by leaving them empty.

All grading activity is done per form, that is, related to one specific form.

QA personnel are expected to enter the right targets for the goal at hand (e.g. daily or weekly, per client or per queue, etc.).

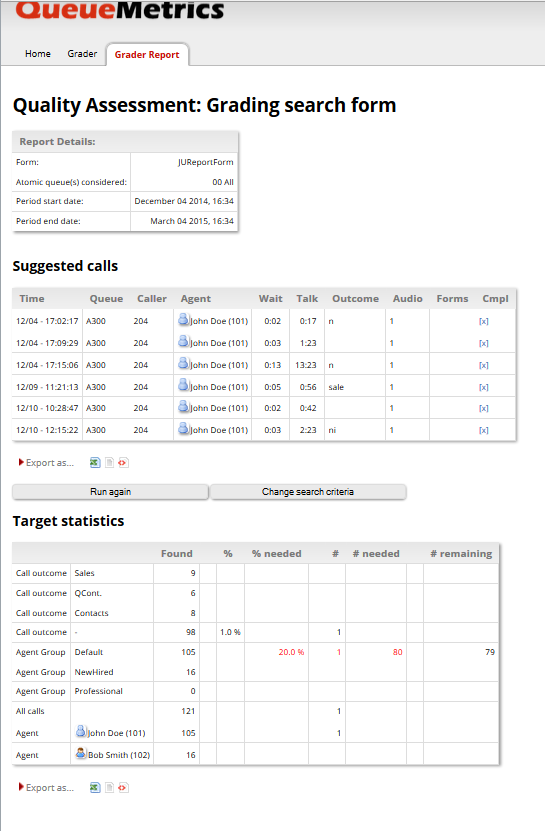

When they do, QueueMetrics will run a report that displays the absolute numbers involved and the number of targets that are currently being hit. At the top of the page, QueueMetrics will then display a random selection of calls to be graded, weighted on the number of targets each call hits (i.e. the more targets a call hits, the more likely it is to appear in the list).

The grader may grade some or all of the suggested calls, or may decide to grade different ones. When done, he clicks on "Run again"; the whole form is computed again, targets are recomputed and so is the set of possible calls.

Selecting which calls to grade

Each call included in the search criteria per time period or queue is first listed. The set of distinct agents involved in the queue is obtained.

Calls are then filtered out if they match any of the following rules:

- They have already been graded for this form
- They have no audio recording
- They would not help meet any target

For each of the remaining calls, a score is computed by assigning one point to each of the criteria that are currently unmet (absolute number and percentage are treated as distinct criteria). Calls are then weighted by the square of their score (that is, a call that helps 10 targets has a relative weight of 100, while one that helps 5 targets has a relative weight of 25) and extracted randomly. This makes it more likely for QueueMetrics to draw calls that match multiple criteria, but even a call matching a single criterion may appear.

As the computations involved are quite heavy (mostly when deciding whether a call has associated audio and QA scoring, as this might be repeated tens of thousands of times), each grader is given a small set of calls to use before reloading.
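The weighted extraction described above can be sketched as follows. This is only an illustration under stated assumptions: the function name, the mapping from call ID to number of unmet targets, and the choice to draw without replacement are hypothetical, and the real selection logic in QueueMetrics may differ.

```python
import random


def pick_calls(candidates, how_many, rng=None):
    """Draw calls at random, weighted by the square of their score.

    `candidates` maps a call ID to the number of unmet targets that the
    call would help. Calls helping no target are never drawn.
    """
    rng = rng or random.Random()
    pool = {call: score for call, score in candidates.items() if score > 0}
    picked = []
    while pool and len(picked) < how_many:
        calls = list(pool)
        weights = [pool[call] ** 2 for call in calls]  # e.g. 10 -> 100, 5 -> 25
        choice = rng.choices(calls, weights=weights, k=1)[0]
        picked.append(choice)
        del pool[choice]  # each call is suggested at most once
    return picked


# Hypothetical candidates: call "d" helps no target and is filtered out.
candidates = {"a": 10, "b": 5, "c": 1, "d": 0}
print(pick_calls(candidates, how_many=2))
```

With these weights, call "a" is four times as likely as call "b" to be drawn first, yet call "c" can still appear.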

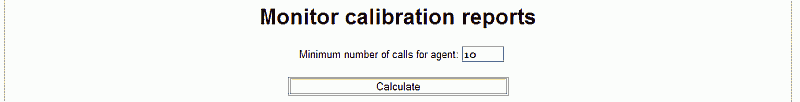

Grader calibration reports

This is a separate, one-page report that can only be accessed by supervisors holding the key "QA_CALREP" (in addition to "QA_REPORT").

To access the page, go to 'Quality Assessment' → 'Run QA Reports' and fill in the form at the bottom of the page.

You will also use the form at the top of the page, the one you usually use for QA reports.

On the input page you select:

- A date range (dual selector plus predefined periods)
- One Form (if we had an option for All, we’d get way too many)
- A queue or composite queue
- A call outcome, or none for all calls
- Optionally, an agent group as an additional filter
- Optionally, a minimum number of graded calls a grader must have in order to be included

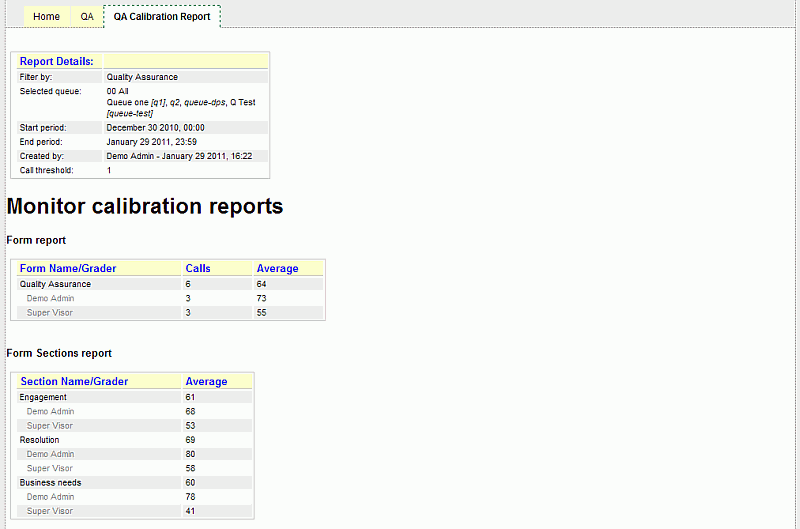

The analysis happens at three levels:

- The whole form
- The section level
- The question level

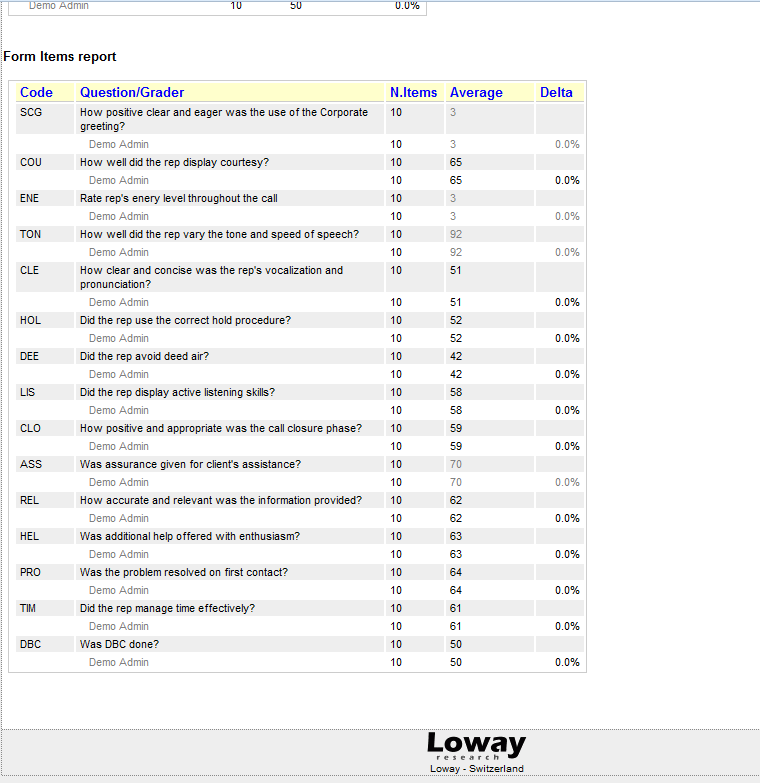

For each form/section/question, a table is computed for the overall set and for each grader that has graded at least X items.

For each form/section/question, an average is computed and compared to that of all graders who graded at least X calls in the specific area. This way it is easy to spot trends and anomalies in grading behavior. Values shown in gray refer to non-scorable questions. The average for this type of question is shown only for reference purposes.
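The comparison can be pictured with a short sketch. It assumes a hypothetical mapping from grader name to the list of scores that grader gave for one form, section or question, and it assumes the reference average is pooled over all scores from qualifying graders; the actual report may compute its reference value differently.

```python
from statistics import mean


def calibration(scores_by_grader, min_graded):
    """Compare each qualifying grader's average with the overall average.

    Graders with fewer than `min_graded` scores are left out of both the
    overall average and the per-grader results.
    """
    qualifying = {grader: scores
                  for grader, scores in scores_by_grader.items()
                  if len(scores) >= min_graded}
    all_scores = [s for scores in qualifying.values() for s in scores]
    if not all_scores:
        return None, {}
    overall = mean(all_scores)
    deltas = {grader: mean(scores) - overall
              for grader, scores in qualifying.items()}
    return overall, deltas


# Hypothetical sample data: "carl" graded too few calls to be included.
scores = {"anna": [80, 90], "bob": [60, 70], "carl": [100]}
overall, deltas = calibration(scores, min_graded=2)
print(overall)  # 75
print(deltas)   # {'anna': 10, 'bob': -10}
```

A grader whose difference from the overall average is consistently large in one direction is a candidate for a calibration review.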