Quality Assessment in QueueMetrics

QueueMetrics includes a Quality Assessment (QA) module that lets you:

- Define a set of metrics to be used for call grading
- Have the QA team grade calls while they’re being processed or from historical recordings
- Run complete reports by queue and by agent

Enabling QA monitoring

In order to use QA monitoring, you should have the following security keys assigned:

- QA_TRACK: the holder can input QA data. A user who also holds the keys to access historical calls or real-time calls will be able to fill in QA forms. Individual forms can be further restricted by key-protecting them.
- QA_REPORT: the holder can access QA reports. Individual forms can be further restricted by protecting them with a reporting key as well.
- USR_QAEDIT: the holder can create and modify QA reporting forms.

Understanding Quality Assessment

The QA module in QueueMetrics was built so that a specific QA supervisor can track the performance of agents on a given set of metrics. Each metric is expressed as a long description and has a unique engagement code (a short acronym of up to 5 letters).

Metrics are user-definable and are clustered together in forms; a form can hold up to 130 metrics divided into up to 10 metric groups.

A single reporter can grade a call only once for each defined form; any attempt to grade the same form for the same call multiple times will not be accepted.

For security reasons, call grading data cannot be modified once input, and forms with live data associated with them cannot be deleted from the system. If you use successive versions of a form over time, you can close an older form, i.e. prevent further input on it, so that only a reduced set of metrics remains available. Deletions, if any, will be performed at the database level by the system administrator.

Grading data is expressed as integer numbers between 0 and 100; grading all fields is mandatory, except for fields marked as "optional" in the form definition. The QA team can also input free text comments linked to a specific call.

It is possible to edit the thresholds for the different levels of QA grades, e.g. 0-25: Issue, 26-50: Improvement required, 51-75: Meets expectations, 76-100: Exceeds expectations. These values can be defined on a form-by-form basis; they make it possible to count the number of items that belong to each category and to use a colour code for immediate graphic representation.
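As an illustration of how such thresholds partition scores, the sketch below maps a grade to its level and counts items per level. It uses the example values quoted above; the names `LEVELS`, `classify` and `count_by_level` are hypothetical and are not part of QueueMetrics.

```python
from bisect import bisect_left
from collections import Counter

# Inclusive upper bound of each level, taken from the example thresholds above.
# Real forms may define different bounds and labels.
LEVELS = [
    (25, "Issue"),
    (50, "Improvement required"),
    (75, "Meets expectations"),
    (100, "Exceeds expectations"),
]


def classify(score: int) -> str:
    """Return the level label for an integer score between 0 and 100."""
    if not 0 <= score <= 100:
        raise ValueError(f"score out of range: {score}")
    bounds = [upper for upper, _ in LEVELS]
    # bisect_left finds the first level whose upper bound is >= score.
    return LEVELS[bisect_left(bounds, score)][1]


def count_by_level(scores: list) -> Counter:
    """Count how many scores fall into each level."""
    return Counter(classify(s) for s in scores)
```

For example, `count_by_level([10, 60, 80, 100])` reports one "Issue", one "Meets expectations" and two "Exceeds expectations" items.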

Grading calls

Grading data can be input while listening to the live call (Unattended monitoring), while looking at the historical call details, or through a specially formatted URL.

Grading historical calls

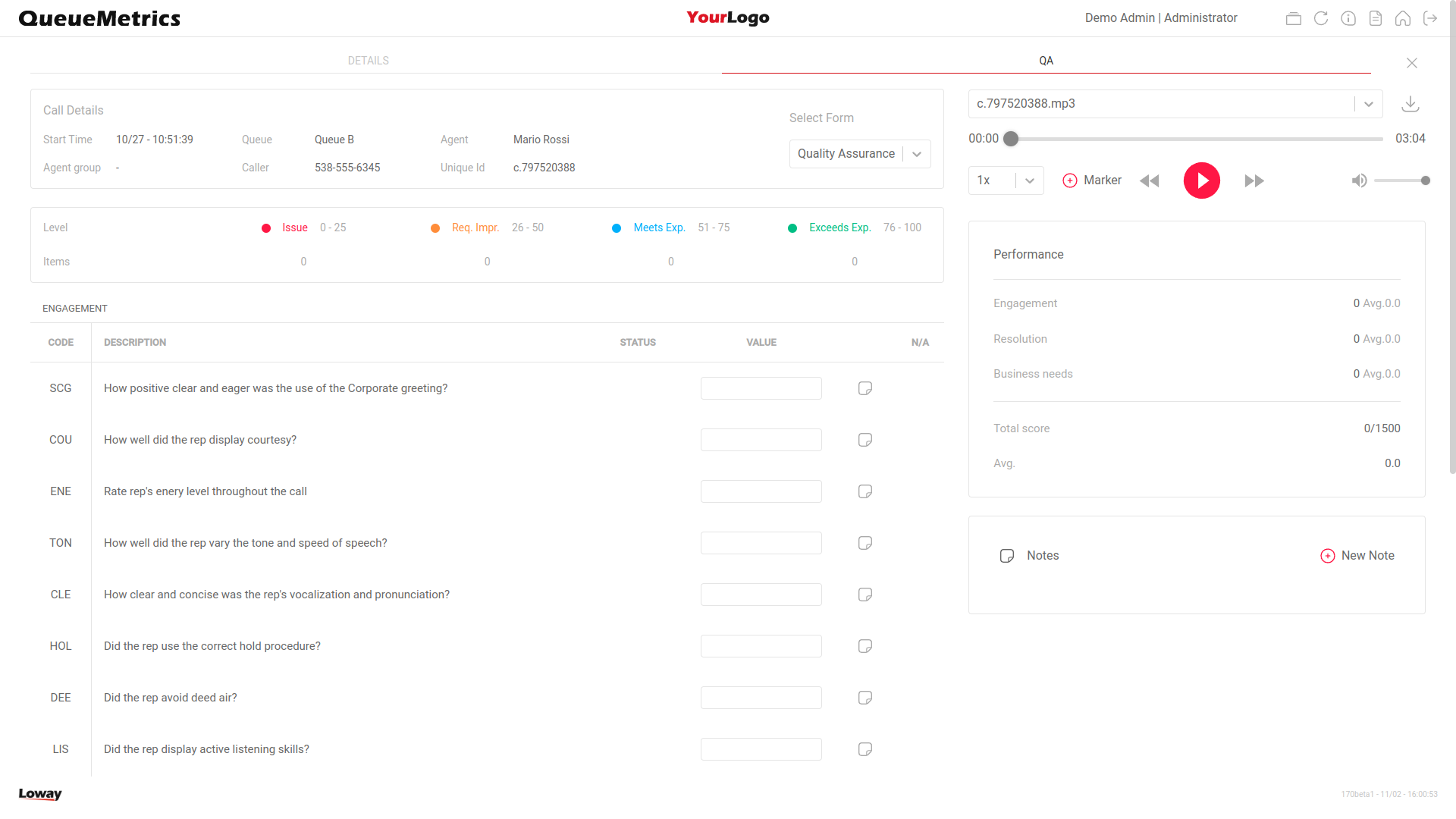

In order to do the grading of historical calls, you proceed as is the case for audio recordings. If QA grading is enabled, the QA Form will appear in the QA tab, as in the picture below.

The input form

If multiple QA forms are available for this QA person, they can choose the correct one from the "Input form" field at the top right.

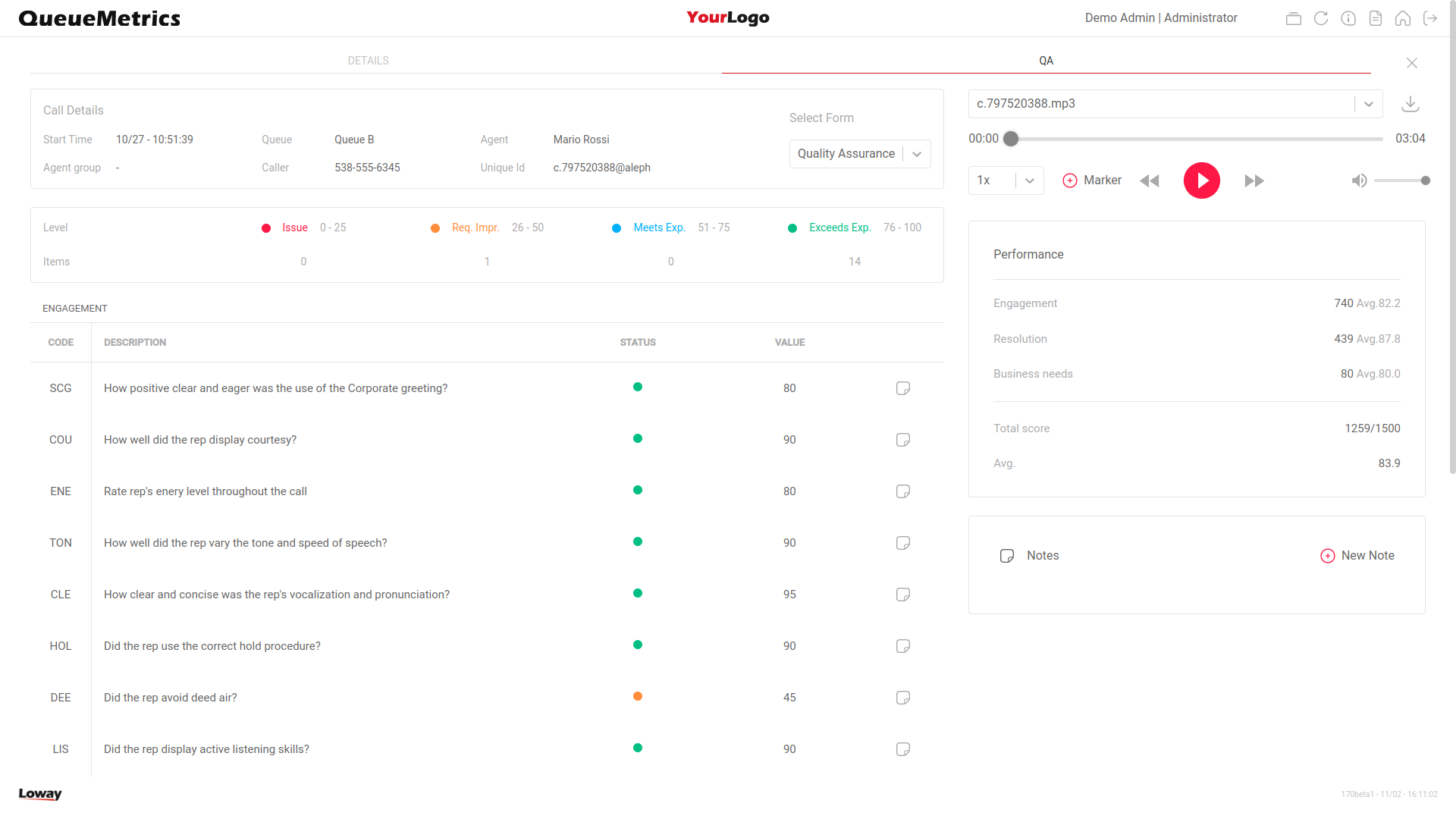

The top-left box shows the current threshold values for each level (the label associated with each level can be customized from the form configuration page; see Configuring QA forms for further information). While you input data into the form, the number of items that fall into each category and the average and total scores are updated in real time.

| The overall score and average are reported for each section. Zero values are marked in red. This makes it easier to spot session shortcuts (see Configuring QA items for further details) in already scored forms. |

At the bottom of the form is the Save button, which saves your changes and exits the form. It appears only when the data is in a consistent state that allows saving.

If you load a saved form, it will be shown in read-only mode.

In the bottom part of the form are the items to be graded, grouped into a set of categories. If a box contains invalid data (i.e. something that is not a number between 0 and 100 inclusive), it will be displayed in red and the form won’t let you save.
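The validity rule described here can be sketched as a small check. This is only an illustration of the rule as stated (an integer between 0 and 100 inclusive); the function name `is_valid_score` is hypothetical, and QueueMetrics' own validation may differ in details such as whitespace handling.

```python
def is_valid_score(raw: str) -> bool:
    """Return True if the text is an integer between 0 and 100 inclusive."""
    text = raw.strip()  # assumption: surrounding whitespace is ignored
    # Accept plain ASCII digits only; this rejects "", "-5", "7.5" and "abc".
    if not (text.isascii() and text.isdigit()):
        return False
    return 0 <= int(text) <= 100
```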

Following the form definition, items can be graded by:

- inserting a score value
- selecting the appropriate value from the dropdown menu
- checking the proper Yes/No options.

Items that are not mandatory have an associated N/A checkbox; when checked, it disables the related score value and lets the user save the form without specifying any score for that item. If all fields within a given section are marked as N/A, the Overall Performance will display the entire section with an N/A Average total value.

If a shortcut item fails, that is, if it receives a score that falls into the "Issue" category, the overall form score is set to 0.
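The interaction between N/A items and shortcut items can be sketched as follows. This is a simplified model, not the QueueMetrics algorithm: it assumes an unweighted average of the graded items and an "Issue" upper bound of 25 (the example threshold quoted earlier). The names `ISSUE_MAX` and `form_score` are hypothetical.

```python
from typing import List, Optional, Tuple

ISSUE_MAX = 25  # assumed upper bound of the "Issue" level; configurable per form

# Each item is (score, is_shortcut); a score of None means the item is N/A.
Item = Tuple[Optional[int], bool]


def form_score(items: List[Item]) -> Optional[float]:
    """Return the overall score, or None when every item is N/A."""
    graded = [(score, shortcut) for score, shortcut in items if score is not None]
    if not graded:
        return None  # nothing was scored, so the result is N/A
    # A shortcut item scored in the "Issue" range zeroes the whole form.
    if any(shortcut and score <= ISSUE_MAX for score, shortcut in graded):
        return 0.0
    # Assumption: plain average; real forms may apply item weights.
    return sum(score for score, _ in graded) / len(graded)
```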

The value set in some items may control the set of items that are enabled for the current form; that’s why the form is evaluated again after each user input.

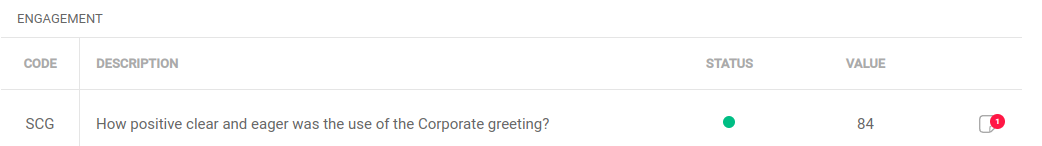

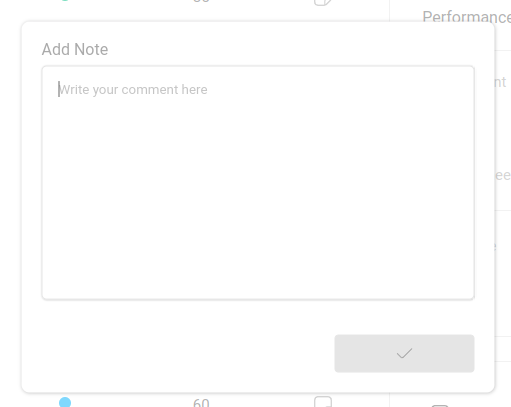

It is possible to attach one or more free-text notes to each question in the form; these are called "per-question" notes. To add one, click the icon on the right side of the question and type the text in the popup that appears. Questions that already have per-question notes are marked with a different icon, as per the following figure:

For a thorough description of how Forms and Items can be set up, please see the chapter Configuring QA forms.

When a form is saved, it appears as per the following figure:

It basically shows the same data that was input, but it cannot be changed anymore (except for users holding specific keys as defined by Removing or Editing QA forms) and the supervisor information is shown. If there are known audio recordings for this call, they are shown in the top-right.

When you press the Add Note button, a modal appears, letting you enter a note:

The note is saved by pressing the "Add" button. All comments already added are listed in chronological order, together with the per-question notes entered for the form; per-question notes associated with the selected form are shown at the beginning of the comments list.

For each call it is possible to add markers, which can be created and deleted as required, in order to record further details about that specific call. This works best in conjunction with the HTML5 audio player, as described in HTML5 Player.

| It is not possible to submit partial forms. |

Grading over HTTP access

It is possible to grade a particular call through an HTTP request to the QueueMetrics server, using a URL specifically formatted for this purpose.

When such a URL is entered in the browser, QueueMetrics redirects to the login page (if required), where the user can log in to continue.

QueueMetrics shows the grading input form in the browser window and the user can grade the call and/or add notes to it.

The URL to be used to trigger the grading procedure should follow the syntax below:

http://qmserver.corp:8080/queuemetrics/qm_qa_jumptogradepage.do? QAE_astclid=1286184814.122 &QAE_queue=queuename &QAE_formName=FormToBeGraded &QAE_CallStartDate=2010-10-04.11:00:00

(of course the URL should appear all on one line).

In the example, we trigger a grading procedure on the host 'qmserver.corp' on port 8080. The context is queuemetrics (but it may differ depending on the local install). The following parameters are then passed:

- QAE_astclid: specifies the Asterisk unique id of the call to be graded
- QAE_queue: specifies the name of the queue on which the call was taken
- QAE_formName: specifies the name of the form to be graded
- QAE_CallStartDate: specifies when the call was taken. The value should be formatted as YYYY-MM-DD.hh:mm:ss and should represent a time before the call (the exact time the call was taken is not required, but the value should fall shortly before it).
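A grading URL with the parameters listed above can be assembled programmatically. The sketch below uses Python's standard library; the helper name `grading_url` is hypothetical, and the base address is the one from the example. Colons are left unescaped so that the result matches the example URL; whether the server also accepts percent-encoded colons is not stated here.

```python
from datetime import datetime
from urllib.parse import urlencode


def grading_url(base: str, call_id: str, queue: str,
                form_name: str, start: datetime) -> str:
    """Build the jump-to-grade URL for a single call."""
    params = {
        "QAE_astclid": call_id,
        "QAE_queue": queue,
        "QAE_formName": form_name,
        # Documented format: YYYY-MM-DD.hh:mm:ss
        "QAE_CallStartDate": start.strftime("%Y-%m-%d.%H:%M:%S"),
    }
    # safe=":" keeps the colons of the timestamp literal, as in the example.
    return f"{base}/qm_qa_jumptogradepage.do?{urlencode(params, safe=':')}"


url = grading_url(
    "http://qmserver.corp:8080/queuemetrics",
    "1286184814.122",
    "queuename",
    "FormToBeGraded",
    datetime(2010, 10, 4, 11, 0, 0),
)
```

With these inputs, `url` is the example URL shown above, written on a single line without spaces.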

Removing or Editing QA forms

Users holding the key 'QA_REMOVE' can delete a form.

When a form is deleted, its content is dumped to the Audit Log.

Any access to a deleted form is flagged by a special message shown in the form.

After deleting a form, it is again possible to grade a call as if it was never graded before.

Users holding the keys 'QA_REMOVE' and 'QA_REPLACE' can edit an already submitted form. When a submitted form is edited, QueueMetrics performs a normal delete action, then shows the user a new editable form with pre-filled values in each row. The data is then processed following the usual procedure.

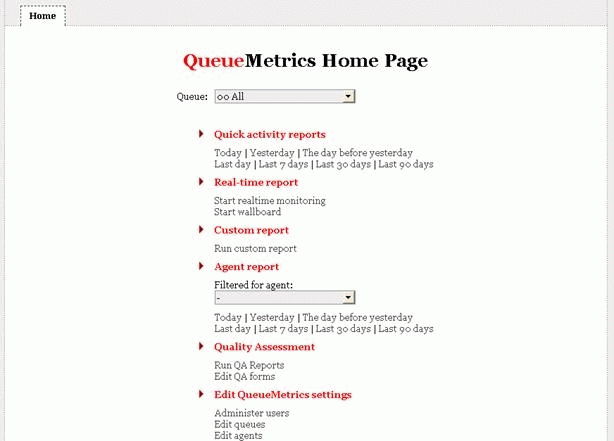

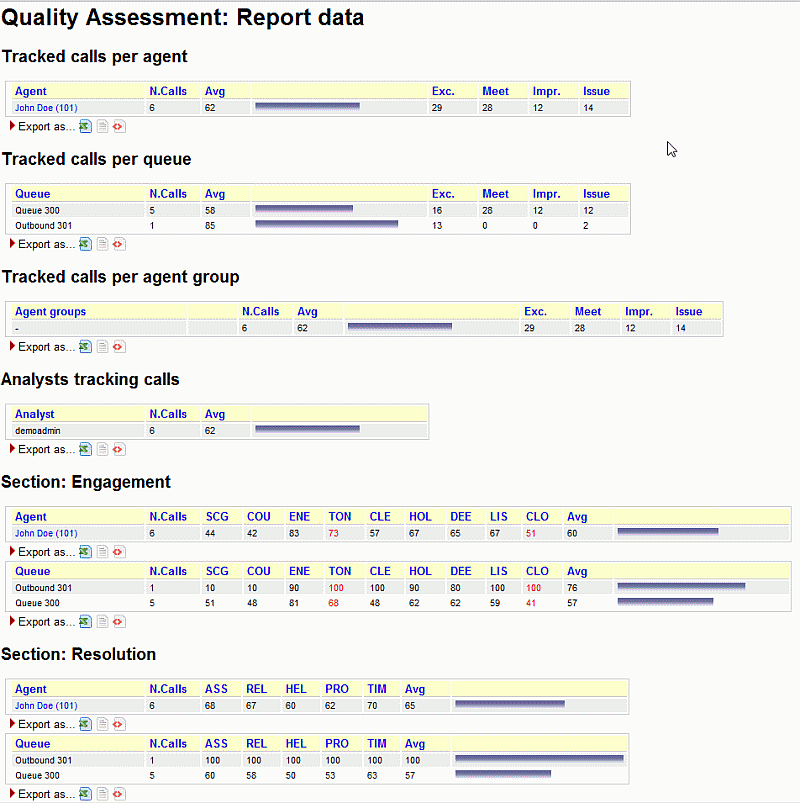

Running QA reports

In order to run QA reports, you must go to the main page of QM and click on the "Run QA forms" label.

The system will show the following form:

The parameters have the following meanings:

- Form is the name of the form you want to run a report for
- Queue can be one or more queues. You can then run different reports for different queues, or use a catch-all queue
- Agent is an optional Agent filter
- Location is an optional Location filter
- Agent Group is an optional Agent Group filter
- Grader is an optional parameter that filters by the person who compiled the form
- Supervision is an optional Supervisor filter
- Outcome is an optional call outcome code filter
- Asterisk call-id is the unique-id of the call
- Caller is the caller’s number (exactly as it appears on the caller-id, with no rewriting)
- Start Date and End Date delimit the start time of the calls whose QA forms will be included in the report.

By clicking on "Calculate" or "Show Summary" the actual results are shown. If multiple search parameters are set, all of them must match for a form to be included in the result set.

If you have used Extra Scores (see Chapter 20.9 - 'Configuring QA forms') within the QA form, the "Calculate" and "Show Summary" reports might return averages that are higher than 100.

It is also possible to run a report that compares graders to each other - see Grader calibration reports.

| The fields Asterisk call-id and Caller always treat your query as a case-insensitive substring to be matched, so e.g. if you have a call with a unique-id of '567890.1234', entering '.1234' will be enough to match it. You can also use '_' (underscore) to match any single unknown character or '%' (percent sign) to match zero or more occurrences of any character. |
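The matching rule in the note above can be emulated as follows. This sketch only mirrors the behaviour as described (case-insensitive substring, '_' for one character, '%' for any run of characters); how QueueMetrics evaluates the filter internally is not stated, and the function name `matches` is hypothetical.

```python
import re


def matches(query: str, value: str) -> bool:
    """Case-insensitive substring match with '_' and '%' wildcards."""
    parts = []
    for ch in query:
        if ch == "_":
            parts.append(".")    # exactly one unknown character
        elif ch == "%":
            parts.append(".*")   # zero or more characters
        else:
            parts.append(re.escape(ch))  # everything else is literal
    pattern = "".join(parts)
    # re.search looks for the pattern anywhere in the value (substring match).
    return re.search(pattern, value, re.IGNORECASE | re.DOTALL) is not None
```

Note that a dot in the query is matched literally, so `matches(".1234", "567890.1234")` is true while `matches(".1234", "567890x1234")` is false.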

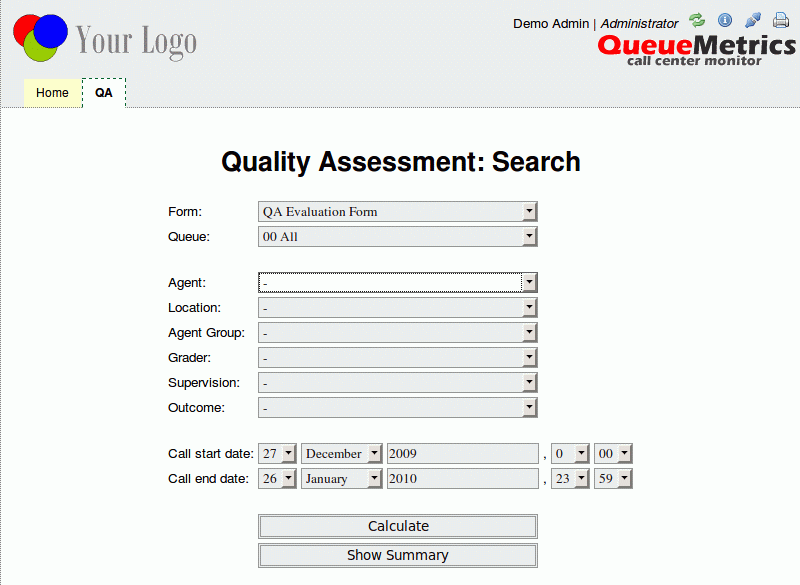

The main QA report

The button "Calculate" shows a report like:

The Tracked calls per agent report shows:

-

The total number of calls that were tracked for each agent

-

The average score for each agent

-

The total number of items that fall into "Exceeds expectations", "Meets expectations", "Improvement required" and "Issue" for each agent.

As you can see, each agent’s name is clickable and leads to a detail of the calls for that agent.

All statistics that are computed per-agent are then recomputed per-queue and per-agent-group.

The Analysts tracking calls report shows how many calls each supervisor graded and the average score that supervisor gave.

Then, for each Section defined in the QA form, you will get the average scores for each item, plus an average of all average scores in order to point out problems.

If an item is shown in red, it has been assigned a zero-weight value. If an item is shown in gray, it has been set as a non-scoreable item. For further information on configuring items within the form, refer to the paragraph Configuring QA items.

All columns can be sorted by clicking on the item name and all data can be downloaded in Excel, CSV or XML format.

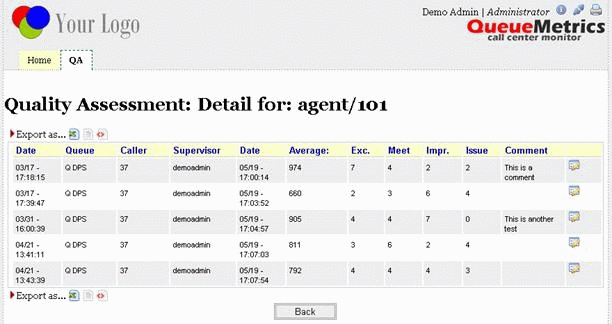

In order to have a better understanding of what is going on, you can click on an agent’s name and get the details, as below:

This shows the details of all calls stored, the number of items for each call that fall into each grading category, the average rating for each call and the comment.

By clicking on the form icon on the right, you can access the QA form that was graded for this call, so you can see the individual scores and listen to the audio recordings related to this form.

The QA Summary report

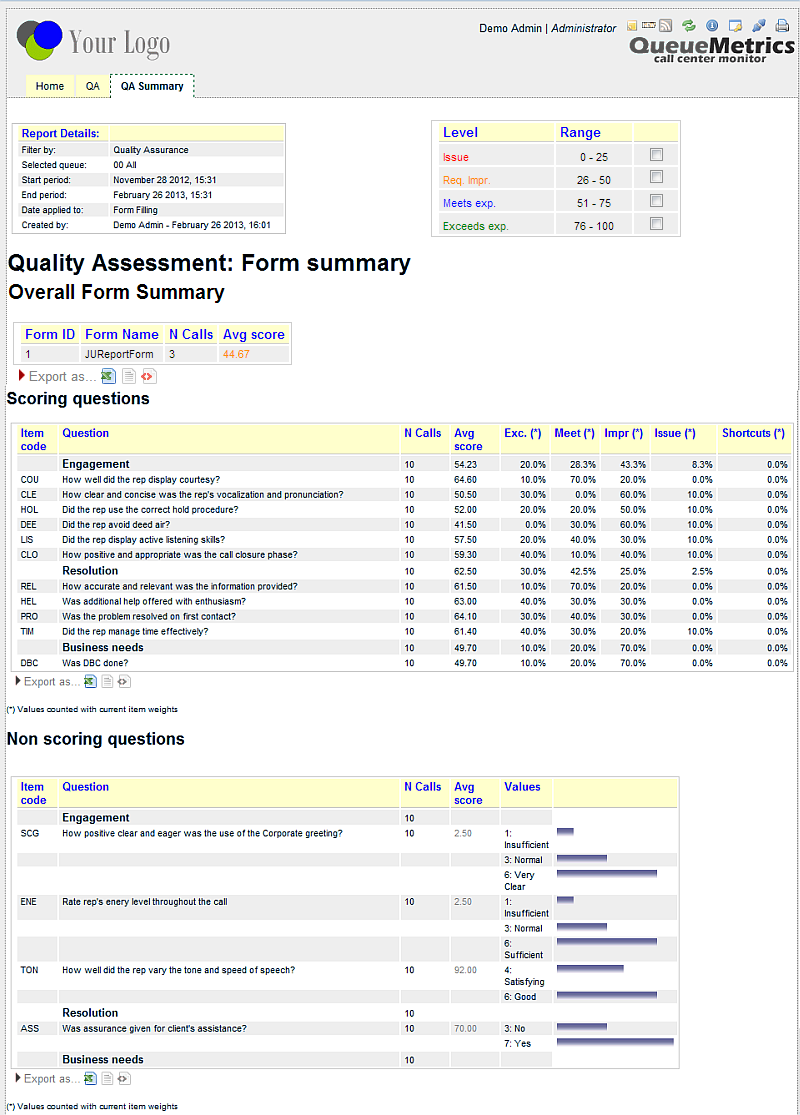

The "Show Summary" button shows a report like:

This report calculates, for each item and for each section in the form:

- average for the whole form
- number of calls graded
- average score and cumulated percentage for each item and section that "Exceeds expectations" (the column marked "Best"), "Meets expectations" (Good), "Improvement required" (Ok) or "Issue" (Req.Imp.) (only for scoreable items)
- average score and number of times each value is found in the reports (only for non-scoring questions)
- cumulated percentage for each item marked as "shortcut"

All values are computed according to the current item weights (only for scoreable items), in case you use weighted items.

The data can be exported to Excel, CSV and XML formats.

At the top right of the report is a box listing the levels, each with a checkbox. Values shown in the result table are coloured according to the status of the checkboxes. This is useful for highlighting questions whose average values fall within a specific set of levels. The status of the checkboxes persists across sessions.

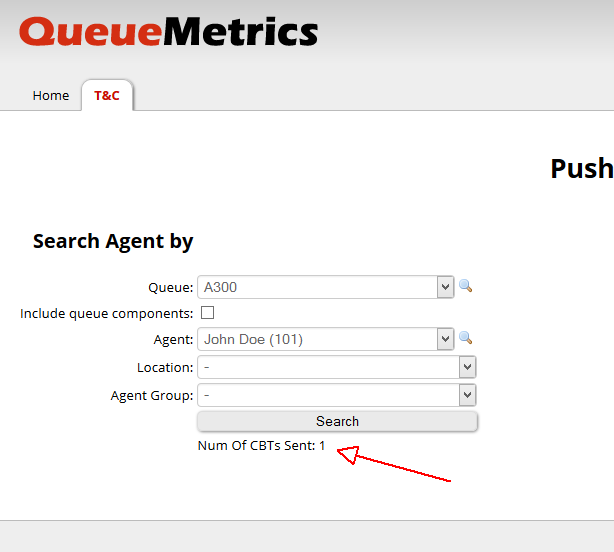

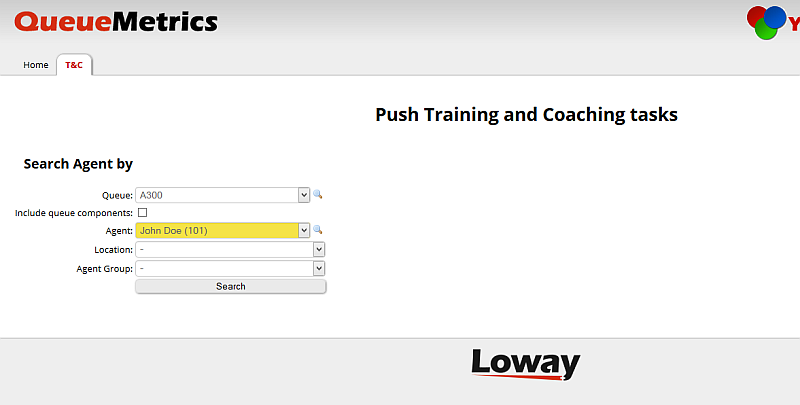

The Training And Coaching Page

Users holding the security key TASK_PUSH_TC can access the Training and Coaching Page. This page is the main access point for sending CBTs and Meeting (also called Coaching) tasks to an agent or a set of agents. It is based on a three-step wizard: the list of users is defined in the first two steps, and the CBT or Meeting tasks are sent in the third. Agent selection starts from several search criteria, as listed below:

- The queue dropdown
- Whether the search should take queue components into account (this is useful when a composed queue is selected in the previous dropdown)
- An agent dropdown
- A location dropdown
- An agent group dropdown

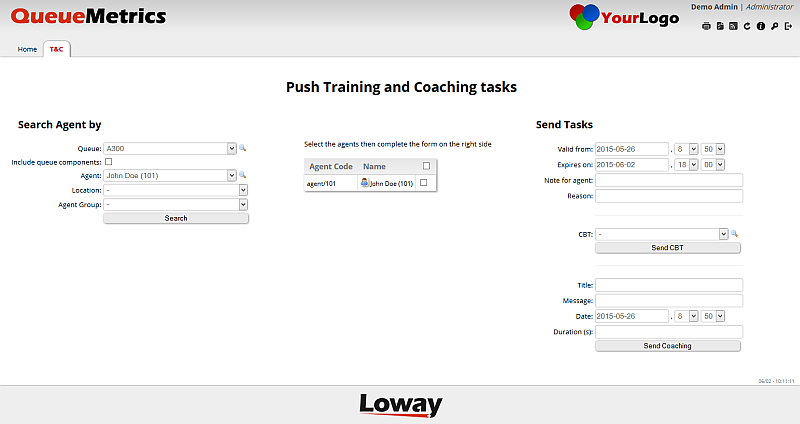

All agents matching the criteria are shown as soon as the user presses the "Search" button, as shown in the picture below.

In the list of agents found, shown in the center of the page, a single agent can be selected by clicking on the related checkbox, whereas all agents in the list can be selected by clicking on the checkbox in the list header.

Once agents are selected, the user can send a CBT or a Coaching task by filling in the validity date and time fields and, optionally, a note that will be shown to the agents and a reason (hidden from the agents). Fields specific to CBTs and to Coaching tasks can be found at the bottom right of the page. Tasks are sent by pressing the "Send CBT" or "Send Coaching" buttons.

At the end of the process, QueueMetrics shows the number of CBT/Coaching tasks sent, as shown in the following picture.